Looking Back 20 Years: How an Academic Bet on Real-Time Data Finally Paid Off

Written By Claire Fu

Picture this: at 8 PM on a Friday, one person calls an Uber, submitting a request alongside hundreds of other people. These requests are processed through Uber’s algorithm, which must consider thousands of variables, from driver locations that update in real time to surge pricing calculations that change based on current demand, to determine a match between driver and rider. Uber’s matching problem is a prime example of streaming data at work: in sharp contrast to static data, which resides in a dataset and remains unchanged from day to day, streaming data arrives continuously, queries are submitted in real time, and each answer must reflect the most up-to-date information at hand.

Twenty years ago, data infrastructure was typically built to handle static data, and data that needed to be “streamed” was instead updated periodically, in a process known as batch processing. However, this turned out to be a problem for time-sensitive tasks, such as trading in the stock market or judging whether a credit card transaction is fraudulent. How can an algorithm make accurate decisions when the data is more than a day old? A steep drop in market prices, or a stolen credit card, could easily have occurred within that window and changed the decision an algorithm should make. These delays generated a lot of frustration for data scientists.

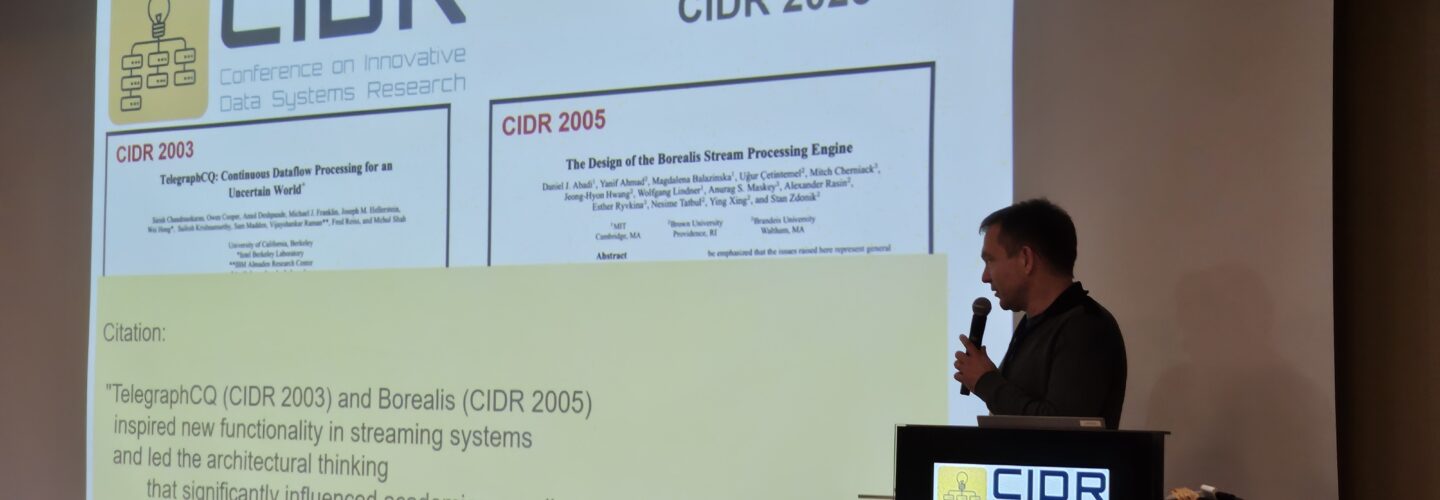

At that time, Morton D. Hull Distinguished Service Professor Michael Franklin, now at the University of Chicago, was part of a team of researchers at UC Berkeley that took on the challenge of creating a system that could handle the large flow of data in real time. Simultaneously, researchers at MIT and Brown were doing the same thing. Their respective systems, along with the papers detailing them, were released in the early 2000s at the Conference on Innovative Data Systems Research (CIDR) and earned both groups the prestigious Test of Time award in 2025.

“Research is often very ‘fad’-driven. People get excited about an idea and produce a lot of research relating to it, so a lot of the content in these conferences, and the Best Paper Award, is all in the moment,” Franklin states. “The idea behind the Test of Time award is that, looking back ten or 20 years from now, you gain a perspective on what work has lasting importance. So, it’s a real honor, because this is something that is given with a good deal of context and time that has gone by. We were very happy to receive this.”

In their paper, TelegraphCQ: Continuous Dataflow Processing for an Uncertain World, Franklin and his colleagues built on an existing system called Telegraph (named after Telegraph Avenue in Berkeley). The original Telegraph system was not designed for continuous data processing in real time, so they expanded its capabilities to handle continuous queries, hence the addition of “CQ” to the name. The system leverages PostgreSQL, one of the earliest open-source database systems, which uses SQL as its query language. In contrast, the MIT/Brown paper created a brand new system and interface language, which required more coding effort and a steeper learning curve for users.
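To make the idea of a continuous query concrete, here is a minimal Python sketch. It is an illustration only, not TelegraphCQ code: the function name `continuous_avg`, the sample ticks, and the three-second window are all assumptions chosen for the example. Instead of computing one answer over a finished dataset, the query emits a fresh answer every time a new value arrives.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

Tick = Tuple[float, float]  # (timestamp in seconds, value); hypothetical record shape


def continuous_avg(stream: Iterable[Tick], window_seconds: float) -> Iterator[Tick]:
    """Yield (timestamp, average of values in the trailing window) per arrival."""
    window: Deque[Tick] = deque()
    total = 0.0
    for ts, value in stream:
        window.append((ts, value))
        total += value
        # Evict values that have aged out of the trailing window.
        while window[0][0] <= ts - window_seconds:
            _, old_value = window.popleft()
            total -= old_value
        yield ts, total / len(window)


# Hypothetical price ticks; the gap before t=5.0 ages out all earlier values.
ticks = [(0.0, 10.0), (1.0, 20.0), (2.0, 30.0), (5.0, 40.0)]
results = list(continuous_avg(ticks, window_seconds=3.0))
# results == [(0.0, 10.0), (1.0, 15.0), (2.0, 20.0), (5.0, 40.0)]
```

A batch job, by comparison, would wait until all of the ticks had been collected and report a single average long after the values were current.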

“We broke fewer things,” Franklin reflects. “PostgreSQL has turned out to be by far the most popular of open source database systems, so we were way ahead of our time. It was a natural choice for us because it came out of Berkeley, so we knew the people who built it originally. We extended an existing database system instead of building everything brand new, and we used as much as we could.”

While some of the TelegraphCQ design decisions proved to be prescient, others were perhaps a bit too radical. For example, TelegraphCQ implemented a novel and aggressive form of adaptive query optimization. In a traditional database system, a query is written in SQL and compiled into an internal program, or plan, before it is run. During this compilation process, the system weighs the different ways to compute the answer and picks the one it estimates to be most efficient, based on assumptions about the data and what will be done with it. TelegraphCQ took an adaptive approach instead. Because the data is constantly changing in real time, the system also revises its choice of the most efficient method on the fly, so the method in use can keep changing as a query progresses. This created a few problems, including a degree of performance unpredictability beyond what is preferred for many real-world applications.
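The flavor of adapting on the fly can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not TelegraphCQ's actual mechanism: the `AdaptiveFilter` class, the two predicates, and the synthetic transactions are all hypothetical. The filter keeps running statistics on how often each condition passes and, for every new row, checks the condition most likely to reject it first.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Predicate = Callable[[Row], bool]


class AdaptiveFilter:
    """Reorders its predicates per row using observed pass rates (toy sketch)."""

    def __init__(self, predicates: Dict[str, Predicate]) -> None:
        self.predicates = predicates
        self.seen = {name: 0 for name in predicates}
        self.passed = {name: 0 for name in predicates}
        self.evaluations = 0  # total predicate calls, a rough cost measure

    def _pass_rate(self, name: str) -> float:
        # Smoothed estimate: starts at 0.5 before any observations.
        return (self.passed[name] + 1) / (self.seen[name] + 2)

    def current_order(self) -> List[str]:
        # Lowest pass rate first, so likely rejections happen early.
        return sorted(self.predicates, key=self._pass_rate)

    def accept(self, row: Row) -> bool:
        for name in self.current_order():
            self.evaluations += 1
            self.seen[name] += 1
            if not self.predicates[name](row):
                return False
            self.passed[name] += 1
        return True


flt = AdaptiveFilter({
    "large": lambda r: r["amount"] > 100,     # hypothetical fraud signals
    "foreign": lambda r: r["country"] != "US",
})

# Phase 1: small foreign purchases, so "large" rejects every row.
phase1 = [flt.accept({"amount": 10, "country": "FR"}) for _ in range(50)]
order_after_phase1 = flt.current_order()

# Phase 2: the data shifts to large domestic purchases, so "foreign" rejects.
phase2 = [flt.accept({"amount": 500, "country": "US"}) for _ in range(200)]
order_after_phase2 = flt.current_order()
```

In this run the filter starts by checking `large` first and, a few rows after the data shifts, switches to checking `foreign` first. A plan fixed at compile time would keep the original order and pay for two checks on every row in the second phase. The trade-off the article describes shows up here too: the cost of processing a row depends on what the system has recently seen, which makes performance harder to predict.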

Stream query processing, despite its advances at the time, did not make as much of a splash in the early 2000s as Franklin and his colleagues had expected. In hindsight, there was not a huge market at the time for a continuous database system because companies and organizations that were using a lot of data had already streamlined their business processes around batch processing, in which they wait overnight to update the database and receive answers the next day. Most business processes at the time were not built to react immediately to new and constantly updated information, except for specific use-cases like credit card fraud detection, where hand-coded solutions had already been created for that purpose.

Now, businesses are catching up. In addition to the competitive advantage of processing data faster than a competitor can, the introduction of AI has made continuous processing an even more important feature. Where people once sat at the other end of a query, there are now AI agents that can run constantly in the background, making adaptive processing of streaming data a much better fit.

“This is a classic example of a case where academics were willing to work on things decades before the market was ready for them,” Franklin says. “Eventually, the techniques and the technologies that we came up with finally became valuable, because the market caught up. I believe that is why the awards committee decided to give the Test of Time award to us and to the MIT/Brown group. We made this bet. It didn’t look like the best bet for many years, but now it turned out to be a big deal.”

TelegraphCQ led to the founding of Truviso, a company that was later acquired by Cisco. The Berkeley team’s foundational work, alongside the MIT/Brown collaboration, influenced the creation of multiple pioneering stream-processing frameworks, such as Kafka Streams and Apache Spark Structured Streaming. Apache Spark, co-founded by Franklin, has become one of the most popular open-source big data processing systems in use today.

Looking ahead, Franklin is dedicated to modernizing data management in the age of AI. His research focuses on three key areas: enhancing the integration of AI models and data management, building data systems optimized for AI agents, and collaborating with other researchers to create a universal data processing system that can handle image, video, and other non-traditional data formats.

“I love doing research in academia because we get to work on practical problems that were arguably ahead of their time, but that a company probably wouldn’t necessarily invest in,” Franklin says. “This is how we are building the computer science department here at UChicago. We are recruiting students and faculty who are motivated by practical problems and are willing to take intellectual risks to try new things.”