Designed to Deceive: Why Knowledge Isn’t Enough to Beat Dark Patterns

In late 2023, the Federal Trade Commission sued Amazon, alleging that the tech giant deceptively enrolled millions of users into Amazon Prime and deliberately complicated the cancellation process. These manipulative tactics, designed to coerce users into decisions they wouldn’t otherwise make, are known as “dark patterns” or deceptive designs. Yet, despite their pervasiveness across the Internet, regulations to protect consumers from these digital traps remain critically underdeveloped.

Marshini Chetty, Associate Professor of Computer Science at the University of Chicago, is at the forefront of data-driven research aimed at making the Internet more trustworthy by combating dark patterns. Sidley Austin Professor of Law Lior Jacob Strahilevitz and Matthew Kugler, Professor of Law at Northwestern University, approach the same problem from a legal and behavioral standpoint. Merging Chetty’s technical expertise with that legal-behavioral approach, the three co-authored the paper “Can Consumers Protect Themselves Against Privacy Dark Patterns?”, which received a 2026 Privacy Papers for Policymakers Award from the Future of Privacy Forum.

While dark patterns may seem inherently manipulative, attempts to regulate them have faced opposition—largely due to a lack of empirical studies quantifying their actual impact on user behavior.

“One of the arguments about misleading interfaces is that, once people know a dark pattern exists, surely, they can just avoid it and protect themselves against it, right?” Chetty asks. “Our study’s fundamental question is, if you have a privacy-related dark pattern, such as something that will make you give up more data than you would prefer to, are you able to protect yourself?”

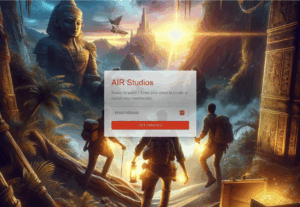

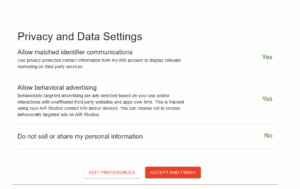

To study this issue, master’s student Chirag Mahapatra and high school student Yaretzi Ulloa designed “Air Studios,” a simulated subscription video-on-demand platform modeled after the Netflix sign-up process. To test the efficacy of manipulative design, the researchers observed more than 1,500 participants as they navigated the platform. When asked for permission to collect data, users in the experimental groups encountered various dark patterns. These included visual interference (highlighting the “accept” button over the “decline” button), obstruction (adding hurdles to the opt-out process), and nagging (repeatedly prompting users after they declined).

The results confirmed the power of these privacy-related dark patterns. In the control group, where no dark patterns were used, far fewer users signed up for targeted advertising, which would require them to give up more of their data. Conversely, introducing dark patterns significantly increased the number of users who consented to personalized ads. This susceptibility persisted even among users who were explicitly instructed to prioritize privacy-preserving choices. While that instruction did provide some protection, the study demonstrated that dark patterns remain highly effective at steering users’ behavior to the provider’s benefit.

“When you’re trying to get companies to change their interfaces to minimize these kinds of manipulative interfaces,” Chetty said, “it’s helpful to document both the qualitative experiences of how people feel about these interfaces and experimental evidence of how these interfaces impact people’s behaviors. We show that even if people are given the goal of protecting their privacy, some of these privacy-related patterns still work against them. That is why this research is important to me.”

In effect, Chetty emphasized, these kinds of studies suggest that neither the ubiquity of deceptive designs across the Internet nor growing awareness of dark patterns is enough to help consumers protect themselves against the manipulative effects of these designs. The findings also strengthen the case for regulatory protections against deceptive designs online.

Beyond research, both Chetty and Strahilevitz have served as expert witnesses in major cases about dark patterns. Most recently, Chetty was involved in the lawsuit against Amazon, which resulted in a $2.5 billion settlement, including $1.5 billion in customer refunds and a ban on using such manipulative practices in the future.

To learn more about Chetty’s work, visit her lab page here.